
AI and authenticity when sharing practice. Does any of it matter?

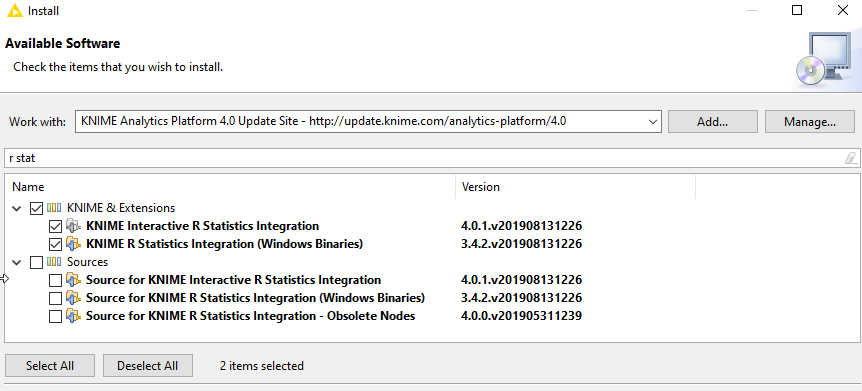

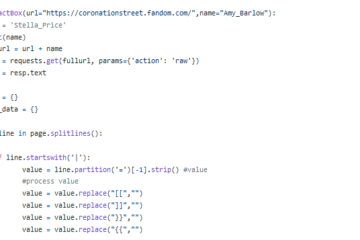

As part of my job in educational technology, I like to tinker with technology to find solutions to problems. I also like to share this tinkering with friends and colleagues. Previously, I did this by blogging regularly…